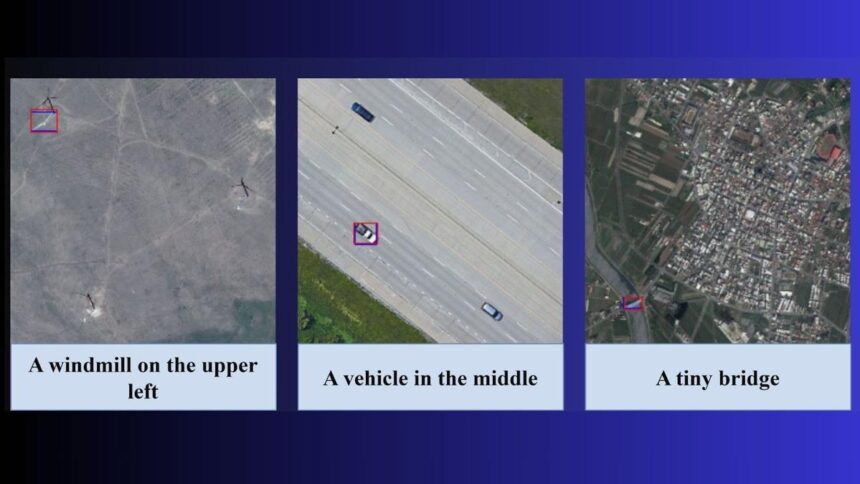

Researchers from the Indian Institute of Technology, Bombay (IIT Bombay) have introduced an artificial intelligence (AI) model called Adaptive Modality-guided Visual Grounding (AMVG), which enables machines to interpret satellite and drone images using natural language prompts. The technology could prove valuable in fields such as disaster response, surveillance, urban planning, and agriculture.

The project is led by Professor Biplab Banerjee of IIT Bombay’s Centre of Studies in Resources Engineering. According to Shabnam Choudhury, the lead author and a PhD researcher, identifying a simple object such as a cat is straightforward for AI, but interpreting intricate, high-resolution satellite imagery from complex language commands has historically been difficult. AMVG aims to close this gap by letting users enter prompts such as “find all damaged buildings near the flooded river” and receive precise results within minutes, even when searching large image collections.

The findings were published in the ISPRS Journal of Photogrammetry and Remote Sensing, the journal of the International Society for Photogrammetry and Remote Sensing. Choudhury said AMVG makes image analysis faster and more accessible for agencies and researchers. “Remote sensing images are rich in detail but extremely challenging to interpret automatically. Existing models struggle with ambiguity and contextual commands,” she added.

AMVG incorporates several innovations, including a Multi-stage Tokenised Encoder and an Attention Alignment Loss (AAL), which use context to improve how accurately the model locates the objects a prompt describes. AAL acts like a “virtual coach,” steering the system’s attention toward the image regions a command refers to. Choudhury explained, “When a human reads ‘the white truck beside the fuel tank,’ our eyes know where to look. AAL teaches the machine to do the same.”
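The article does not give the exact form of AAL, so the following is only a minimal sketch of the general idea of an attention-alignment penalty, under stated assumptions. It assumes the model produces non-negative attention weights over a grid of image patches and that the annotated target object covers a known rectangle of patches; the loss is small when attention is concentrated inside that rectangle and grows as attention drifts elsewhere. The function name, the patch-grid layout, and the negative-log formulation are all hypothetical and are not taken from the AMVG paper or its code.

```python
import math
from typing import List, Tuple

# Hypothetical target region on the patch grid:
# (row_start, col_start, row_end, col_end), with end indices exclusive.
Region = Tuple[int, int, int, int]


def attention_alignment_loss(attention: List[List[float]], region: Region) -> float:
    """Hypothetical loss: -log of the share of attention inside the target region.

    Illustrative only; this is not the formulation used by AMVG.
    """
    total = sum(sum(row) for row in attention)
    if total <= 0:
        raise ValueError("attention map must have positive total mass")
    r0, c0, r1, c1 = region
    inside = sum(attention[r][c] for r in range(r0, r1) for c in range(c0, c1))
    # Clamp the share so that log never sees 0 when no attention lands in the region.
    share = max(inside / total, 1e-12)
    # log(1 / share) equals -log(share); it is 0 when all attention is inside the region.
    return math.log(1.0 / share)


if __name__ == "__main__":
    # 4 x 4 patch grid; the phrase is assumed to refer to the top-left 2 x 2 patches.
    target = (0, 0, 2, 2)
    scattered = [[1.0] * 4 for _ in range(4)]  # attention spread evenly over the image
    focused = [[1.0 if r < 2 and c < 2 else 0.0 for c in range(4)] for r in range(4)]
    print(attention_alignment_loss(scattered, target))  # log(4), about 1.386
    print(attention_alignment_loss(focused, target))    # 0.0
```

Minimizing a term like this during training pushes the model to "look where the words point," which is the intuition behind Choudhury's analogy; a real system would combine it with its main localization loss.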

The research team anticipates diverse applications for AMVG. In disaster management, for instance, agencies could swiftly identify damaged infrastructure following floods or earthquakes. Security forces might use the technology to detect camouflaged vehicles in sensitive regions, while farmers could monitor crop health by prompting the model to highlight yellowing areas.

However, Professor Banerjee pointed out that AMVG has yet to be tested in real-world disaster scenarios. He told The Hindu that preliminary studies have been conducted, but the lack of comprehensive disaster management datasets has prevented a full-scale evaluation. Building such datasets is part of the team’s future plans.

According to the researchers, AMVG outperforms existing methods at identifying damaged structures, concealed vehicles, and crop patterns in complex terrain, although a broader benchmarking study is still needed. Asked whether the model could help governments and NGOs during natural disasters, Banerjee was optimistic: “Surely. That’s one of the strongest use cases we envision.”
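The article does not say how AMVG's performance was measured. Visual grounding results are commonly reported as accuracy at an intersection-over-union (IoU) threshold of 0.5: a predicted box counts as correct if it overlaps the annotated box by at least that much. The sketch below assumes that metric and axis-aligned boxes given as (x_min, y_min, x_max, y_max); it illustrates how such a score is computed and is not the team's evaluation code.

```python
from typing import List, Tuple

# Axis-aligned box as (x_min, y_min, x_max, y_max) in pixel coordinates (assumed format).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def accuracy_at_iou(preds: List[Box], truths: List[Box], threshold: float = 0.5) -> float:
    """Fraction of predictions whose IoU with the paired ground-truth box meets the threshold."""
    if len(preds) != len(truths):
        raise ValueError("need exactly one prediction per ground-truth box")
    if not preds:
        return 0.0
    hits = sum(1 for p, t in zip(preds, truths) if iou(p, t) >= threshold)
    return hits / len(preds)


if __name__ == "__main__":
    truths = [(0, 0, 10, 10), (5, 0, 15, 10)]
    preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
    print(iou(preds[1], truths[1]))        # 50 / 150, about 0.333
    print(accuracy_at_iou(preds, truths))  # 0.5: one hit, one miss
```

A "broader benchmarking study" would typically report scores like this across several datasets and thresholds, which is why a single comparison is treated as preliminary.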

The team is also exploring partnerships to put AMVG into operational use. “We have already collaborated with ISRO on similar issues,” Banerjee noted, adding that a new round of collaboration with the Indian Space Research Organisation (ISRO) is coming up, with vision-language models likely to be a focus.

AMVG has shown promising results on imagery from satellite, drone, and airborne sensors. In the next phase of research, the team will deploy the model in diverse geographical and environmental settings to assess how well it adapts.

The IIT Bombay team has also released the AMVG implementation as open source on GitHub. “Open-sourcing is still uncommon in remote sensing. We wanted to encourage transparency and accelerate progress,” Choudhury said.

Despite its potential, the team recognizes that AMVG has limitations. It currently relies on high-quality annotated datasets and requires further optimization for real-time operation. Researchers are working on sensor-aware versions and compositional grounding techniques to enhance its adaptability to various landscapes.

“Our goal is to create a unified remote sensing understanding system—one that can ground, describe, retrieve, and reason about any image using natural language,” Choudhury stated.