A recent report by the Center for the Study of Organized Hate (CSOH) highlights the growing use of AI-generated images to spread Islamophobic content in India. The study examines how emerging AI tools are being used to spread disinformation and incite violence against Muslims.

As artificial intelligence (AI) text-to-image technology advances, it has raised concerns worldwide, as malicious actors use these tools to spread misinformation and target minority groups. Although numerous studies have examined the divisive role of social media in India, the influence of AI-generated images has yet to be thoroughly analyzed.

Nabiya Khan, one of the report's researchers, describes the proliferation of Islamophobic content on Indian social media as both "a symptom and an amplifier of the risks of AI's rise." She said these tools have made existing prejudices more scalable, faster to spread, and harder to trace, which prompted the research.

The report's focus is particularly relevant given the surge in anti-Muslim sentiment over the past decade in media narratives and political discourse. This sentiment has had severe consequences, including mob lynchings, extrajudicial killings, and the destruction of Muslim-owned property and places of worship.

The research warns that the widespread adoption of AI tools has "grave implications for India's religious minorities." It also argues that AI content risks further colonizing an Indian information landscape that is already rife with misinformation and marked by anti-minority bias and a crisis of credibility.

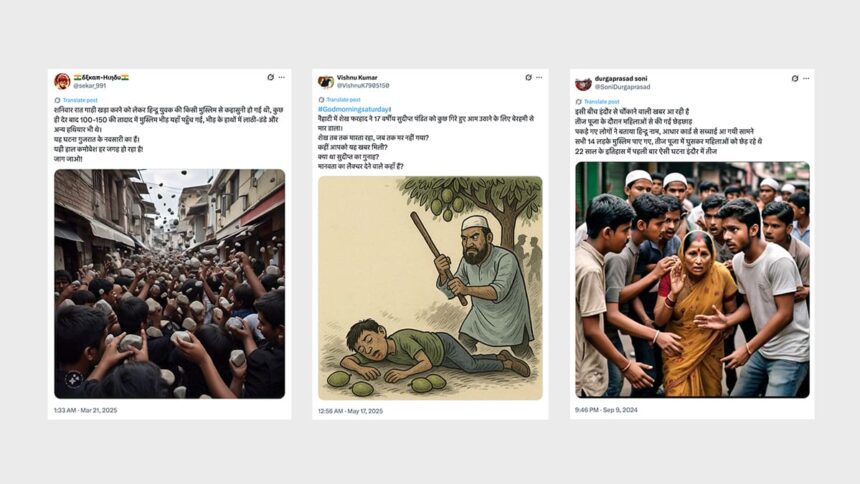

Nabiya noted an alarming trend: “AI-generated images began circulating so quickly, showing hyperrealistic portrayals of Muslim men as violent figures or sexualizing Muslim women. These images were convincing enough to masquerade as news photos.”

The report analyzed 1,326 publicly available AI-generated images from 297 accounts on social media platforms including X (formerly Twitter), Facebook, and Instagram. All of these accounts had been identified as having a history of posting hateful content and drawing considerable engagement. The posts studied amassed a total of 27.3 million engagements across these platforms, with Instagram receiving the most, which the report takes as a sign of deficiencies in moderation.

A key finding of the report is that "AI is not creating new hate but automating existing hate." The report explains that anti-Muslim sentiment in India draws heavily on the idea of Muslim criminality, perpetuated through depictions of Muslims as violent and immoral, and that AI-generated images reinforce these stereotypes through visual narratives.

The study found recurring patterns of misinformation that twisted incidents to give them a sectarian angle, often linking Muslims to conspiracies or violent events without evidence. For example, the Jalgaon train tragedy, which was attributed to a mechanical failure, was framed by right-wing news channels as "Rail Jihad," with coverage often accompanied by AI-generated imagery portraying Muslims in threatening contexts.

Furthermore, the report noted significant engagement with sexualized depictions of Muslim women, illustrating how Islamophobia and misogyny coexist, each amplifying the other through AI-generated content.

Covering a two-year period, the study identified spikes in hateful posts that coincided with broader media narratives. The growing uptake of AI tools, especially among far-right Hindu nationalists, has fueled this trend. Outlets such as Sudarshan News and OpIndia were found to spread hateful content created with generative AI.

The report finds that current laws do not adequately regulate such content and argues for interventions that combine policy, platform accountability, and public literacy. It also urges social media platforms to be transparent about how they detect and respond to AI-generated hate.

Of the 187 violating posts that were reported, the study found that only one had been removed, underscoring the platforms' failure to enforce their own community standards. The rapid growth of AI tools allows hateful content to spread quickly, and ineffective moderation systems compound the problem.

To address these challenges, the report advises platforms to invest in AI-aware moderation systems, improve synthetic media detection, and apply their rules consistently. Beyond regulation, Nabiya stresses the importance of public awareness, urging people to evaluate such images critically and to consider who stands to benefit when they are accepted as real.
